If you’re like most developers, you were excited by the promise of AI in software. The dream was simple: let machines handle the grunt work so we could focus on the creative, high-impact problems. Finally, an end to boilerplate and a little more time to think.

But the reality has been different. The industry got swept up in an AI gold rush, and the conversation became dominated by a single idea: generating more code, faster. We got incredibly good at accelerating the first mile of the software lifecycle.

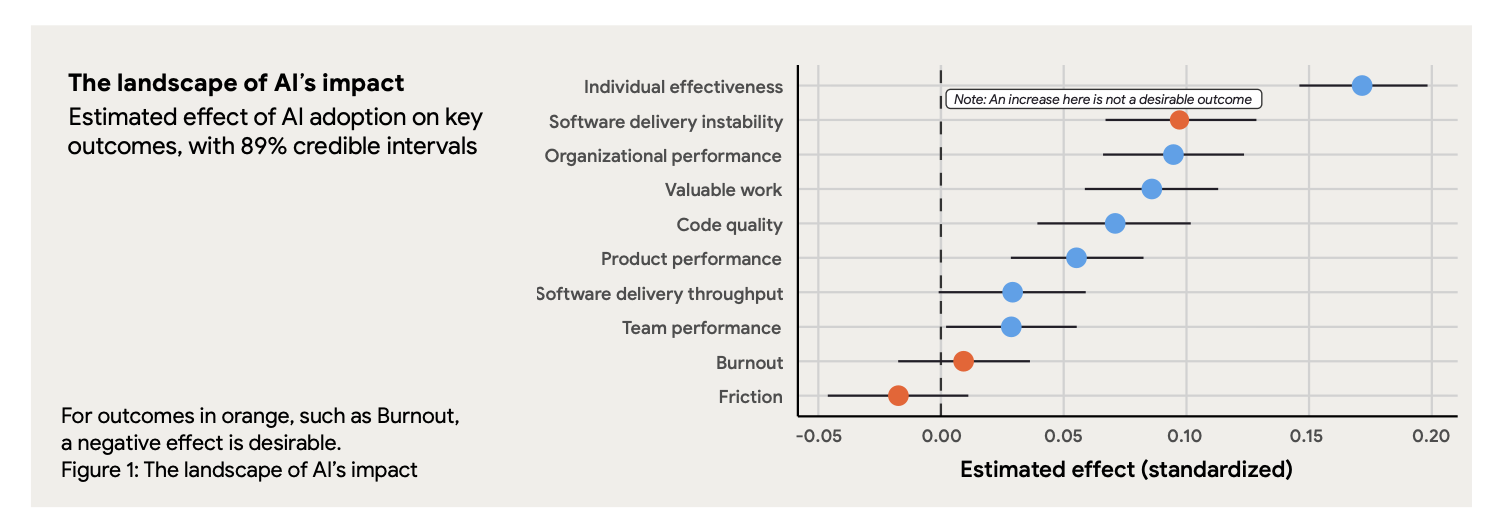

The 2025 Google Cloud DORA report helps paint a more complete picture of what this means for software organizations. “The report confirms that AI is boosting productivity for many developers. More than one-third of respondents experienced ‘moderate’ to ‘extreme’ productivity increases due to AI.” And yet, “AI adoption may negatively impact software delivery performance. As AI adoption increased, it was accompanied by an estimated decrease in delivery throughput by 1.5%, and an estimated reduction in delivery stability by 7.2%.”

The report also warns that instability “still has significant detrimental effects on crucial outcomes like product performance and burnout, which can ultimately negate any perceived gains in throughput.”

This is the Velocity Trap. We're celebrating how fast we can write code, while our overall systems are getting slower and less reliable. The time saved in creation is being repaid, with interest, in the operational effort required to debug and stabilize these increasingly complex systems. The real crisis isn't how we write software; it’s how we run it.

The System-Level Problem

The issue isn’t the AI-generated code itself, but the mismatch between the speed of code creation and the capacity of the rest of the delivery system. As the DORA report, drawing on W. Edwards Deming’s systems theory, notes, “improving one part does not guarantee better outcomes overall.”

This is exactly what we're seeing. Developers now write code faster, but as the report states, “the overall pace of delivery is unlikely to change unless the surrounding workflows [like testing, review, integration] are updated.” Organizations that fail to evolve those surrounding systems are seeing limited returns.

Think of it this way: we’ve built a six-lane superhighway for development that funnels directly into a two-lane bridge for operations. The "Dev" side is producing code at an incredible rate, but this flood of new complexity slams right into the processes that ensure quality and reliability. Pull request queues grow longer, CI/CD pipelines slow down, and the cognitive load on the entire team becomes unsustainable. We've mistaken coding speed for delivery velocity.

Drowning in Comprehension Debt

The fallout from this bottleneck hurts most at 3 AM, when you're paged for a production incident caused by AI-generated code that no one on the call fully understands.

This is the effect of “comprehension debt.” Before AI, much of an engineer's time went to thinking and designing, not just typing. The cognitive load was front-loaded. With AI-generated code, that load gets pushed to later stages. In the best case, some understanding is regained during code review. In the worst case, that understanding is only forced upon us when things break.

It’s like being handed the keys to a legacy system where the original authors have all left the company. During an incident, you’re not just looking for a bug; you’re trying to understand the fundamental logic of code that no human on your team actually wrote. This is why Mean Time to Resolution (MTTR) skyrockets. This is also how operational debt quietly accumulates. The AI might generate code that passes unit tests but introduces subtle dependencies that make the system harder to monitor and predict. We’re building systems that are functional but not resilient.

The Guardians at the Gate

In response, a second wave of AI tools has emerged: the “Code Integrity Guardians.” These are tools for automated code review, specialized testing, and security scanning. They act as AI-powered gatekeepers, trying to ensure that the code hitting production is correct and secure.

This is a necessary step, but on its own it is insufficient, because these tools focus on functional correctness, not operational readiness. They can tell you whether the code works in a sterile test environment, but they can't predict how it will behave under the chaotic pressures of production. They don’t catch the performance, scalability, and reliability problems that matter most once code is live.

These guardians check the code before it ships, but their job ends there. They can’t contain a cascading failure that takes down your service, perform a complex rollback, or find the root cause of a performance degradation in a distributed system. They guard the city walls, but they don't help you fight the fire inside.

With AI Autonomy, Who’s on Call at 3 AM?

This brings us to the third wave of AI: autonomy. Agents like Cognition AI’s Devin, Claude Code, or OpenAI Codex are an evolution of the first wave of code generation, but they introduce a critical change: they move from AI assisting developers to AI replacing entire workflows. This leap from assistance to full automation makes the operational challenge far harder.

It forces the question the industry has been avoiding: If autonomous agents write, test, and deploy most of the code, who operates it?

The blast radius of a mistake changes dramatically. A copilot’s error needs a human to approve and merge it. An autonomous agent can commit, test, and deploy its own mistake before a human is even aware. A future with thousands of AI agents generating software is an operational nightmare unless we have an equally powerful AI backbone to manage it. This is the dawn of “AgentOps,” the discipline of deploying and monitoring autonomous systems, and fixing them when they fail.

Build for Sustainability, Not Just for Speed

Our industry’s obsession with coding velocity is a short-term game with long-term consequences. In our rush to generate code faster, we are creating fragile, unmanageable software ecosystems and burning out our teams.

As we move toward a future of software autonomy, the platforms that ensure operational reliability will become the most critical part of the stack. The next great innovation won’t just be about building faster; it will be about building smarter. To realize the full potential of today's powerful code generators, we must pair them with equally robust operational tools. True innovation comes from combining development speed with systemic stability, a combination that lets us build great products and still sleep through the night.

This is the challenge that drives everything we do at Bacca.AI. We believe that true velocity comes from operational stability, not just coding speed. By building tools that help teams manage and understand their systems, we provide the reliable operator you need to complement your code generator. We aim to solve the operational crisis at the heart of the AI revolution, so your teams get real value from the AI tools they have already adopted.

Eric Lu, Founder & CEO
